Dive deep into ways to load big data sets into MySQL with BreachDirectory. From MySQL INSERT operations to LOAD DATA INFILE: here’s everything you need to know.

Preface

Loading data into a database is one of the main parts of ETL. Depending on your specific use case, you might find yourself using these processes more or less often; regardless, these processes are key to importing any kind of data into a database.

When it comes to MySQL insert operations, almost every developer knows what they do, but not everyone knows how to optimize them. There are even fewer developers who know how to perform MySQL insert operations with billions of records. This is what we’re talking about today.

MySQL INSERT Operations Demystified

Once developers need to insert data into their MySQL instances, there is one query that they all turn to and that’s the MySQL INSERT statement. The MySQL INSERT query then often looks like so:

INSERT INTO `demo_table` (`col1`, `col2`, `col3`) VALUES (“Demo”, “Demo Value”, “Some Data”);

The SQL query above would insert a single row into a table named demo_table. Logically, to insert more rows into a table, you could make use of more INSERT statements like so:

INSERT INTO `demo_table` (`col1`, `col2`, `col3`) VALUES (“Demo”, “Demo Value”, “Some Data”);

INSERT INTO `demo_table` (`col1`, `col2`, `col3`) VALUES (“Value”, “Another Value”, “Data”);

INSERT INTO `demo_table` (`col1`, `col2`, `col3`) VALUES (“Data”, “Data 2”, “Data 3”);

…

However, such an approach is often ill-advised due to the strain INSERT queries pose to our databases. Instead, developers often turn to the bulk-importing capabilities of the MySQL INSERT statement. Such statements often look like so:

INSERT INTO `demo_table` VALUES (“Data”, “Value”, “Another Value”), (“Row Value”, “Good”, “Great”), (“Bad”, “Good”, “Perfect”), …;

Such a MySQL INSERT statement is known as a bulk insert statement. It is called that way because multiple rows that are bound to get inserted are bundled together and executed in one go, thus lessening the work our database needs to perform and completing the SQL query faster in the process.

The Internals of the MySQL INSERT Query

These kinds of MySQL INSERT statements lessen the strain on our database when we insert data because once bundled, they enable our database to skip certain steps in the query execution plan displayed below:

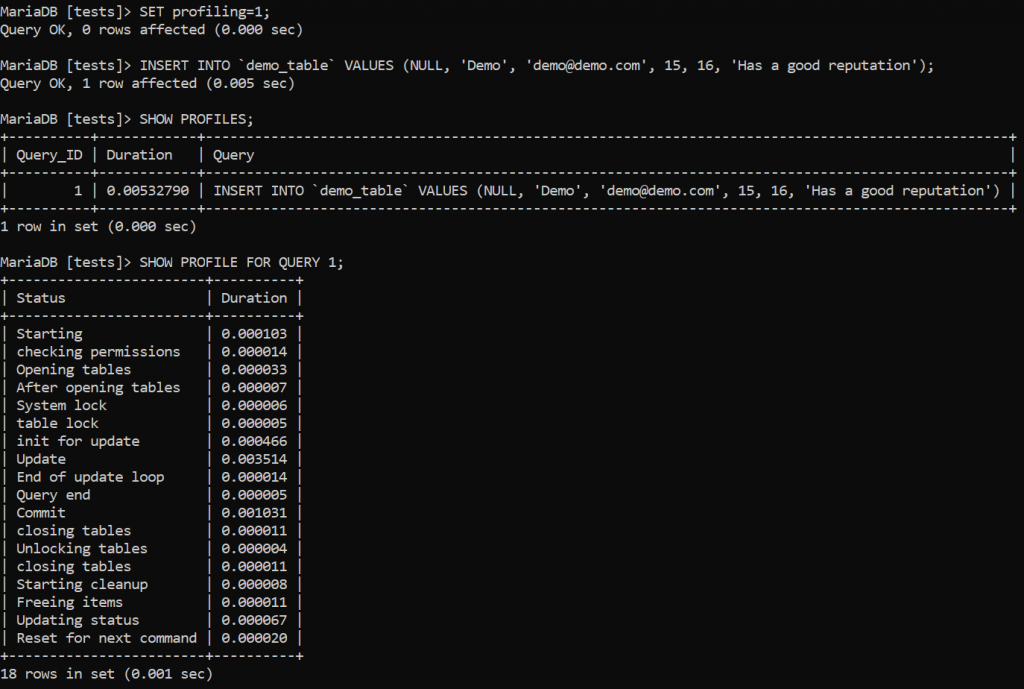

Above, we‘ve done the following:

- Enabled profiling (profiling a MySQL

INSERTquery enables us to observe the duration of the steps taken for the query to execute.) - Ran a MySQL

INSERTquery that inserted one row into a table called demo_table. - Observed the internal steps taken by MySQL to execute our

INSERTquery.

If we observe the steps taken by our MySQL INSERT query, we can see that internally, MySQL has taken 18 steps of interest to our database (and ourselves):

StartingChecking permissionsOpening tablesAfter opening tablesSystem lockTable lockInit for updateUpdateEnd for update loopQuery endCommitClosing tables(two of such values)Unlocking tablesStarting cleanupFreeing itemsUpdating statusReset for next command

Newer versions of MySQL may have a different result set – there may be more items such as “Executing hook on transaction” and “waiting for handler commit”, but the core premise is the same. Here’s what these steps entail:

Startingrefers to the process of our database initializing the MySQL INSERT query.Checking permissionsmeans that MySQL is checking whether appropriate permissions to run the query have been set. If there aren’t enough permissions, the process terminates here.Opening tablesrefers to MySQL opening tables for further operations.After opening tablesorInitrefers to our database performing initalization processes such as flushing the InnoDB and binary log files.System lockrefers to the database waiting for the system lock on the table to be released if it’s in place.Table lockrefers to the database waiting for the table lock on the table to be released if it’s in place.Init for updateorOptimizingrefers to the processes performed by our database to determine how best to execute a given SQL query.Updatemeans that our database is preparing to run the MySQLINSERTquery and that the SQL query is being executed.End of update looprefers to the end of the data updating process.Query endrefers to the query competing its course.Commitrefers to our database saving – or committing – the results that have been obtained.Closing tablesmeans that the tables that were opened by the query are now being closed.Unlocking tablesmeans that MySQL is unlocking tables for further operations on them to be performed.Starting cleanuprefers to our database freeing items from the threads used to execute our SQL query and starting the cleanup process.- Freeing items refers to our database “releasing” the threads and items being held by itself for other processes to peruse.

Updating statusmeans that our database is updating the query status in various places such as inside of the query plan.Reset for next commandmeans that our database has finished running the course of the query and is now ready for the next command (query) to be issued.

These steps are all vital for our query to complete and as you can see, each of them is responsible for different things and each of those things has to be adhered to for our MySQL INSERT query to successfully complete.

These steps are the primary reason why queries inserting hundreds of thousands or millions of records are often so slow to complete; MySQL has to complete 17 steps after each and every query is being run, and if we have 1,000,000 records to insert, there will be around 17 million steps to adhere to and complete before marking our MySQL INSERT query as “finished.” Hence the overhead – if we have 17 million steps to complete, it’s only logical that MySQL would take days, or even weeks, to complete our query, right? Overhead is the primary reason for developers working with bigger data sets ditching INSERT for LOAD DATA INFILE instead.

The Role of LOAD DATA INFILE

When we have so much overhead, it’s only logical that we want to avoid it as much as we can. We can do that by switching the MySQL INSERT query to something that doesn’t carry as much overhead together with it and that’s LOAD DATA INFILE. LOAD DATA INFILE allows us to import bigger data sets with little impact on our database when compared with the MySQL INSERT query as it comes with “bells and whistles” including, but not limited to the following abilities and functions:

- The ability to skip initial lines or columns

- The ability to transform specific columns

- The ability to load data into specified columns leaving everything else intact

- Handling duplicate key and related issues

As such, the LOAD DATA INFILE query looks like so:

LOAD DATA INFILE ‘/tmp/data.csv’ [REPLACE|IGNORE] INTO TABLE `table_name` [FIELDS TERMINATED BY|OPTIONALLY ENCLOSED BY …] [PARTITION partition_name] [CHARACTER SET charset_name];

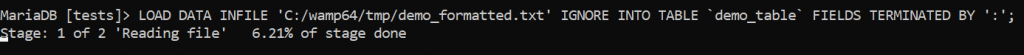

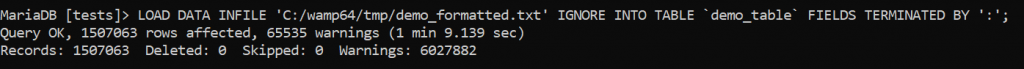

The full syntax of the LOAD DATA INFILE query can be found in the MySQL documentation, but in a nutshell, this SQL query allows us to perform a variety of different things by being built for blazing fast data insertion from text files. As previously mentioned, speed is achieved by either ignoring or eliminating overhead posed by vanilla INSERT queries and that’s why, when used properly, this SQL query can insert billions of records within hours and not within weeks or months. Take a look:

As you can see, our query that inserted 1,507,063 records into a table has been completed in just over a minute. LOAD DATA INFILE has ignored 6,027,882 warnings (we instructed it to do so – observe the query carefully), but didn‘t skip or delete any rows.

Configuration Settings to Use LOAD DATA INFILE

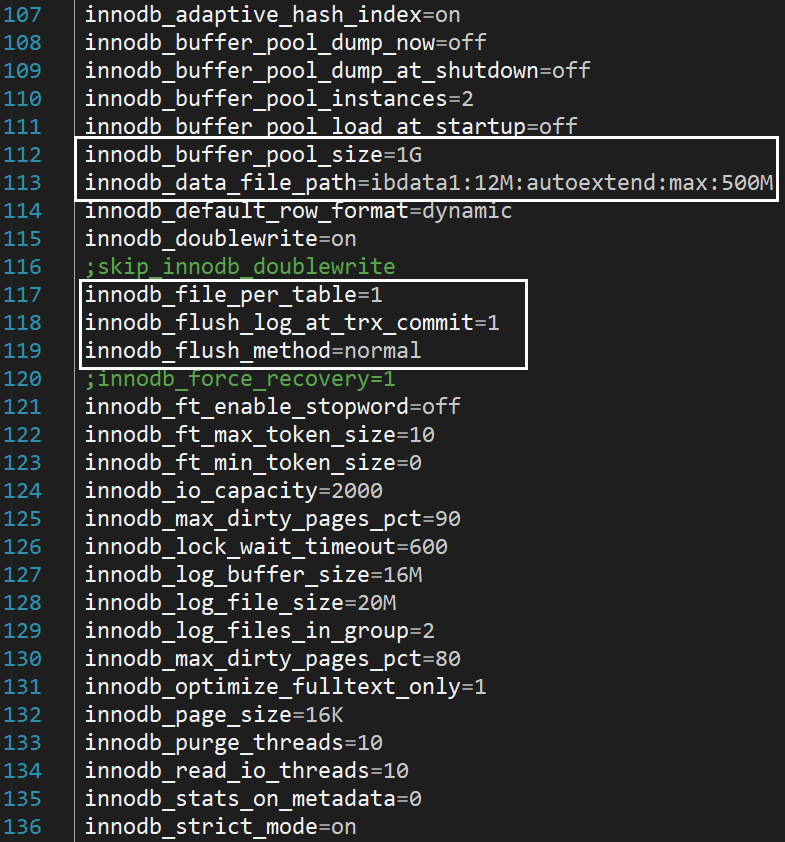

The speed of the LOAD DATA INFILE query directly depends on the configuration settings inside your database. The primary setting that is responsible for the speed of this SQL query is related to the InnoDB buffer pool size and can be modified by fiddling with the innodb_buffer_pool_size inside my.cnf (or my.ini if you find yourself running a Windows server.)

The InnoDB buffer pool size is the size of the buffer pool for the primary storage engine of MySQL – InnoDB. The buffer pool is used by MySQL for data insertion, updation, and selection and the bigger it is, the faster these operations will complete. A good rule of thumb when setting this parameter is setting it to 60-80% of the available memory on your operating system.

While the rest of the options don‘t have a direct impact on LOAD DATA INFILE operations, they are no less important: file_per_table being set to 1 ensures that our tables inside InnoDB-based databases are stored as separate files on the filesystem to prevent the main tablespace of MySQL from clutter, innodb_flush_log_at_trx_commit being set to 1 ensures our database is compliant with ACID principles, and the innodb_flush_method sets the method InnoDB (storage engine) is flushing files to disk and can have multiple options including normal on Windows or O_DIRECT on Linux-based infrastructure, and other options are responsible for other realms. Fiddle with them responsibly to obtain the best possible performance for your database.

Summary

Loading billions of records into MySQL isn‘t hard, but it cannot be feasibly done with a MySQL INSERT statement due to the fact that it comes with a lot of overhead. To load billions of rows into MySQL, optimize your database by fiddling with the settings available in my.cnf or my.ini, then switch the INSERT statement to LOAD DATA INFILE instead.

Frequently Asked Questions

Why is the MySQL INSERT Statement So Slow?

When loading millions or billions of rows into MySQL, queries can slow down due to the fact that INSERT poses a lot of overhead and comes with a lot of steps to complete before marking the SQL query as „completed.“

How to Load Billions of Rows Into MySQL?

To load billions of rows into MySQL, consider using the LOAD DATA INFILE statement.

How to Optimize MySQL for Big Data?

When optimizing MySQL for big data sets, look at the options available in my.ini or my.cnf, depending on your operating system of choice.